Regression Analysis in Data Mining

Aug 16, 2024

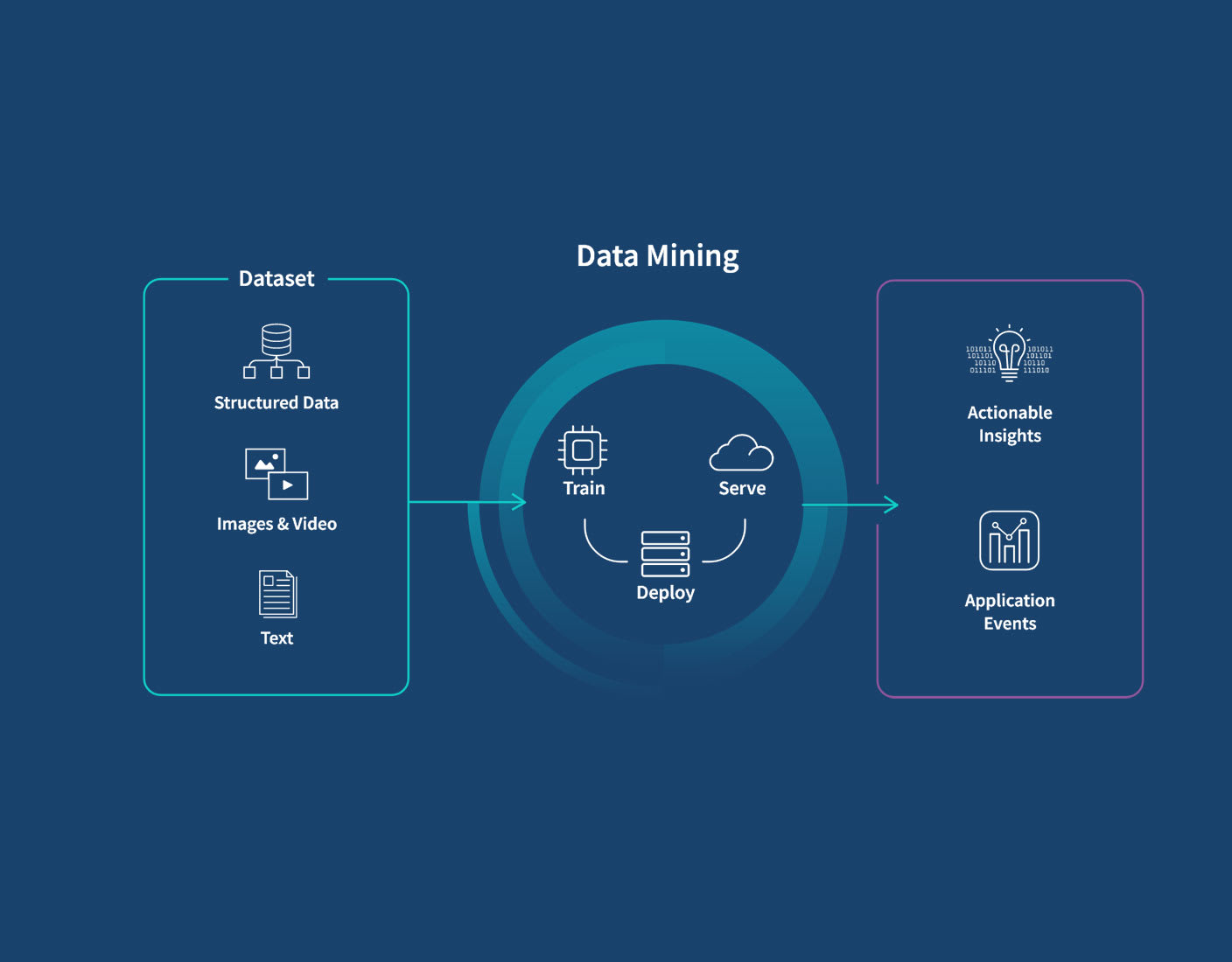

Regression analysis is a core data mining technique for predicting numerical values from the relationships between dependent and independent variables. This blog post covers the fundamentals of regression analysis in data mining, explores its main types and applications, and provides practical coding examples to illustrate its implementation.

What is Regression Analysis in Data Mining?

Regression analysis is a statistical method used to model and analyze the relationships between a dependent variable (the outcome we are trying to predict) and one or more independent variables (the predictors). The primary goal is to understand how the value of the dependent variable changes when any one of the independent variables is varied while the others are held fixed.

For example, if we want to predict the price of a house based on its size, number of bedrooms, and location, the price is the dependent variable, and the size, number of bedrooms, and location are the independent variables. By analyzing historical data, we can build a regression model that predicts the price of a new house based on its attributes.

Importance of Regression Analysis in Data Mining

Regression analysis plays a vital role in various fields, including:

Business and Marketing: Predicting sales, customer behavior, and market trends.

Finance: Estimating stock prices, risk assessment, and financial forecasting.

Healthcare: Analyzing patient data to predict outcomes and treatment effectiveness.

Social Sciences: Understanding relationships between variables in survey data.

Types of Regression Techniques

There are several types of regression techniques used in data mining, each suited for different types of data and relationships.

1. Linear Regression

Linear regression is one of the most widely used regression techniques. It assumes a linear relationship between the dependent and independent variables. With a single independent variable (simple linear regression), the model can be written as:

$$Y = a + bX + e$$

Where:

$Y$ is the dependent variable.

$a$ is the intercept.

$b$ is the slope of the regression line.

$X$ is the independent variable.

$e$ is the error term.
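For this simple model, ordinary least squares (OLS) is the most common fitting method. It chooses $a$ and $b$ to minimize the sum of squared errors over $m$ observations $(x_i, y_i)$. Setting the derivatives of that sum to zero gives the closed-form estimates below, where $\bar{x}$ and $\bar{y}$ are the sample means:

$$
b = \frac{\sum_{i=1}^{m} (x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^{m} (x_i - \bar{x})^2}, \qquad a = \bar{y} - b\,\bar{x}
$$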

Example Code Snippet in Python:
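Here is a minimal sketch of fitting $Y = a + bX$ in plain Python with no third-party packages. It uses the standard ordinary least squares estimates for the slope and intercept. The function names and the toy house-size/price data are hypothetical and were made up for illustration:

```python
from statistics import mean


def fit_simple_linear_regression(xs, ys):
    """Estimate intercept a and slope b of Y = a + bX by ordinary least squares."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    x_bar, y_bar = mean(xs), mean(ys)
    # Numerator: sum of co-deviations of X and Y; denominator: sum of squared X deviations
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; slope is undefined")
    b = sxy / sxx
    a = y_bar - b * x_bar
    return a, b


def predict(a, b, x):
    """Predicted value of Y for a new X."""
    return a + b * x


# Hypothetical toy data: house size (in 100 m^2) vs. price (in $100k)
sizes = [1.0, 1.5, 2.0, 2.5, 3.0]
prices = [2.1, 2.9, 4.2, 4.8, 6.0]

a, b = fit_simple_linear_regression(sizes, prices)
print(f"intercept a = {a:.3f}, slope b = {b:.3f}")
print(f"predicted price for size 2.2: {predict(a, b, 2.2):.3f}")
```

Libraries such as scikit-learn (`LinearRegression`) or NumPy (`numpy.polyfit` with `deg=1`) provide the same fit. The hand-written version simply makes the formulas visible.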

2. Multiple Linear Regression

Multiple linear regression extends simple linear regression by using multiple independent variables to predict the dependent variable. The equation can be represented as:

$$Y = a + b_1X_1 + b_2X_2 + \dots + b_nX_n + e$$

Where $X_1, X_2, \dots, X_n$ are the independent variables and $b_1, b_2, \dots, b_n$ are their coefficients.
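In matrix notation, the $m$ observations are stacked into two objects. The first is a design matrix $\mathbf{X}$ of shape $m \times (n+1)$ whose first column is all ones, which accounts for the intercept $a$. The second is a response vector $\mathbf{y}$. The least-squares coefficient vector $\hat{\boldsymbol{\beta}} = (\hat{a}, \hat{b}_1, \dots, \hat{b}_n)^\top$ then solves the normal equations. When $\mathbf{X}^\top\mathbf{X}$ is invertible, which fails if some predictors are perfectly collinear, the solution is:

$$
\mathbf{X}^\top \mathbf{X}\,\hat{\boldsymbol{\beta}} = \mathbf{X}^\top \mathbf{y}
\quad\Longrightarrow\quad
\hat{\boldsymbol{\beta}} = (\mathbf{X}^\top \mathbf{X})^{-1} \mathbf{X}^\top \mathbf{y}
$$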

Example Code Snippet:
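The following is a minimal sketch that assumes NumPy is available. It prepends a column of ones to the predictors for the intercept $a$ and solves the least-squares problem with `numpy.linalg.lstsq`, which is numerically safer than explicitly inverting $X^\top X$. The helper names and the synthetic data are hypothetical. scikit-learn's `LinearRegression` is a common higher-level alternative.

```python
import numpy as np


def fit_multiple_linear_regression(X, y):
    """Return (a, b) for Y = a + b1*X1 + ... + bn*Xn using least squares.

    X: array of shape (m, n) with m observations of n independent variables.
    y: array of shape (m,) with the dependent variable.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    # Prepend a column of ones so the first coefficient is the intercept a
    design = np.column_stack([np.ones(X.shape[0]), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef[0], coef[1:]


def predict(a, b, X):
    """Predicted Y for each row of X."""
    return a + np.asarray(X, dtype=float) @ b


# Hypothetical synthetic data: Y = 5 + 2*X1 - 3*X2 + Gaussian noise
rng = np.random.default_rng(seed=0)
X = rng.uniform(0, 10, size=(200, 2))
y = 5 + 2 * X[:, 0] - 3 * X[:, 1] + rng.normal(0, 0.5, size=200)

a, b = fit_multiple_linear_regression(X, y)
print("intercept a:", round(a, 2))
print("coefficients b:", np.round(b, 2))
```

Each fitted $b_j$ estimates how much $Y$ changes when $X_j$ increases by one unit while the other predictors are held fixed.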

3. Logistic Regression

Logistic regression is used when the dependent variable is categorical (e.g., a binary outcome such as yes/no). It predicts the probability that the dependent variable takes a particular value, given the independent variables.
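In its most common binary form, the model passes the same linear combination used in multiple linear regression through the logistic (sigmoid) function, which keeps the predicted probability between 0 and 1:

$$
P(Y = 1 \mid X_1, \dots, X_n) = \frac{1}{1 + \exp\left(-(a + b_1X_1 + b_2X_2 + \dots + b_nX_n)\right)}
$$

A threshold, commonly 0.5, then turns the probability into a predicted class.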

Example Code Snippet:
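The following minimal sketch assumes NumPy and fits a binary logistic regression by plain batch gradient descent on the average log-loss. It is a teaching implementation. The function names, the learning rate `lr`, the iteration count `n_iter`, and the hours-studied data are hypothetical choices for this small example. Features on a much larger scale may need a smaller rate or standardization. Production code would typically use a library solver such as scikit-learn's `LogisticRegression`.

```python
import numpy as np


def sigmoid(z):
    """Logistic function mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))


def fit_logistic_regression(X, y, lr=0.5, n_iter=5000):
    """Fit P(Y=1|X) = sigmoid(a + X @ b) by batch gradient descent on the mean log-loss."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    m, n = X.shape
    a, b = 0.0, np.zeros(n)
    for _ in range(n_iter):
        p = sigmoid(a + X @ b)  # current predicted probabilities
        error = p - y           # derivative of each sample's log-loss w.r.t. its linear score
        a -= lr * error.mean()
        b -= lr * (X.T @ error) / m
    return a, b


def predict_proba(a, b, X):
    """Predicted probability that Y = 1 for each row of X."""
    return sigmoid(a + np.asarray(X, dtype=float) @ b)


def predict_label(a, b, X, threshold=0.5):
    """Class label (0 or 1) obtained by thresholding the probability."""
    return (predict_proba(a, b, X) >= threshold).astype(int)


# Hypothetical data: hours studied -> passed exam (1) or not (0)
hours = np.array([[0.5], [1.0], [1.5], [2.0], [2.5], [3.0], [3.5], [4.0], [4.5], [5.0]])
passed = np.array([0, 0, 0, 0, 1, 0, 1, 1, 1, 1])

a, b = fit_logistic_regression(hours, passed)
print("P(pass | 1 hour): ", round(float(predict_proba(a, b, [[1.0]])[0]), 3))
print("P(pass | 4 hours):", round(float(predict_proba(a, b, [[4.0]])[0]), 3))
```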

Challenges in Regression Analysis

While regression analysis is a powerful tool, it comes with challenges:

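Assumptions: Linear regression typically assumes a linear relationship between the variables, independent errors, constant error variance, and (for significance tests and confidence intervals) normally distributed errors. Violating these assumptions can produce biased coefficients, misleading inferences, or poor predictions.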

Overfitting: Creating a model that is too complex can lead to overfitting, where the model performs well on training data but poorly on unseen data.

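Multicollinearity: When independent variables are highly correlated with one another, the variances of the coefficient estimates are inflated. The estimates become unstable and individual coefficients become hard to interpret.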
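Both of these problems can be checked numerically. The sketch below assumes NumPy and hypothetical synthetic data, and all names in it are illustrative. Part (1) holds out a test set and compares training and test error for a low-degree and a high-degree polynomial fit. A much lower training error than test error is the usual symptom of overfitting, though the exact numbers depend on the random seed. Part (2) computes the variance inflation factor $\text{VIF}_j = 1/(1 - R_j^2)$, where $R_j^2$ comes from regressing predictor $j$ on the other predictors. Values above roughly 5 to 10 are a common rule-of-thumb warning sign.

```python
import numpy as np

rng = np.random.default_rng(seed=42)


def mse(y_true, y_pred):
    """Mean squared error."""
    return float(np.mean((y_true - y_pred) ** 2))


# --- (1) Overfitting: compare training vs. held-out error ---
# Hypothetical data from a quadratic trend plus noise
x = rng.uniform(-3, 3, size=40)
y = 1.0 + 0.5 * x - 0.8 * x**2 + rng.normal(0, 1.0, size=40)

# The points were drawn at random, so slicing gives a random hold-out split
x_train, x_test = x[:30], x[30:]
y_train, y_test = y[:30], y[30:]

for degree in (2, 15):
    model = np.polynomial.Polynomial.fit(x_train, y_train, deg=degree)
    train_err = mse(y_train, model(x_train))
    test_err = mse(y_test, model(x_test))
    print(f"degree {degree:2d}: train MSE = {train_err:.2f}, test MSE = {test_err:.2f}")


# --- (2) Multicollinearity: variance inflation factors ---
def vif(X):
    """Variance inflation factor for each column of X (shape (m, n), n >= 2)."""
    X = np.asarray(X, dtype=float)
    m, n = X.shape
    factors = []
    for j in range(n):
        target = X[:, j]
        # Regress column j on an intercept plus all other columns
        others = np.column_stack([np.ones(m), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(others, target, rcond=None)
        resid = target - others @ coef
        r2 = 1.0 - resid @ resid / np.sum((target - target.mean()) ** 2)
        factors.append(1.0 / (1.0 - r2))
    return factors


# Hypothetical predictors: x2 is almost a copy of x1, x3 is independent
x1 = rng.normal(size=200)
x2 = x1 + rng.normal(scale=0.1, size=200)
x3 = rng.normal(size=200)
print("VIF:", [round(v, 1) for v in vif(np.column_stack([x1, x2, x3]))])
```

Common remedies include simplifying the model, cross-validating it, dropping or combining correlated predictors, and using regularized methods.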

Conclusion

Regression analysis in data mining is an essential technique for predicting and understanding relationships between variables. By utilizing various regression models, data scientists can extract valuable insights from data, aiding decision-making processes across industries.

Incorporating regression analysis into your data mining toolkit can enhance your ability to make data-driven predictions and uncover hidden patterns within your datasets. Whether you are predicting sales, analyzing trends, or assessing risks, understanding and applying regression techniques will significantly benefit your analytical capabilities.

Further Reading

For those interested in deepening their understanding of regression analysis in data mining, consider exploring the following topics:

Advanced regression techniques (e.g., Ridge, Lasso)

By mastering these concepts, you can elevate your data mining skills and apply them effectively in real-world scenarios.