Unlocking the Power of Lasso Regression: A Comprehensive Guide

Aug 17, 2024

Lasso regression, or Least Absolute Shrinkage and Selection Operator, is a powerful statistical technique widely used in predictive modeling and machine learning. By incorporating regularization, Lasso regression simplifies models, enhances interpretability, and helps prevent overfitting, especially in high-dimensional datasets. This blog post will explore the mechanics of Lasso regression, its advantages, practical applications, and implementation strategies, providing you with the knowledge to leverage this method for robust predictive modeling.

Understanding Regression Analysis

Regression analysis is a statistical method used to examine the relationship between a dependent variable and one or more independent variables. Its simplest form, simple linear regression, models the relationship between one independent variable and the dependent variable as a straight line defined by the equation:

$$Y = \beta_0 + \beta_1 X + \epsilon$$

where:

$Y$ is the dependent variable,

$X$ is the independent variable,

$\beta_0$ is the intercept,

$\beta_1$ is the slope,

$\epsilon$ is the error term.

The primary goal of regression analysis is to predict the dependent variable's value based on the independent variables and understand the strength and nature of their relationships.
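To make the equation concrete, here is a small sketch. It simulates data from a known line and checks that ordinary least squares recovers the intercept and slope. The true values ($\beta_0 = 2$, $\beta_1 = 3$) and the noise level are arbitrary assumptions chosen for illustration:

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Simulate data from Y = 2 + 3X + epsilon with epsilon ~ N(0, 1).
# The true beta_0 = 2 and beta_1 = 3 are illustrative choices.
rng = np.random.default_rng(0)
X = rng.uniform(0, 10, size=(100, 1))  # scikit-learn expects a 2-D feature array
epsilon = rng.normal(0, 1, size=100)
Y = 2 + 3 * X[:, 0] + epsilon

# Fit the straight line and read off the estimated intercept and slope.
model = LinearRegression().fit(X, Y)
beta_0, beta_1 = model.intercept_, model.coef_[0]
print(f"estimated intercept: {beta_0:.2f}, estimated slope: {beta_1:.2f}")
```

The estimates land close to, but not exactly on, the true values because of the random error term $\epsilon$.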

Lasso Regression Overview

Lasso regression extends linear regression by adding a regularization term to the loss function. This term penalizes the absolute size of the coefficients, shrinking them toward zero and setting some of them exactly to zero. The mathematical formulation of Lasso regression is:

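$$\min_{\beta_0,\,\beta}\left(\sum_{i=1}^{n}(y_i-\hat{y}_i)^2+\lambda\sum_{j=1}^{p}|\beta_j|\right)$$

where:

$y_i$ are the observed values for the $n$ observations,

$\hat{y}_i = \beta_0 + \sum_{j=1}^{p}\beta_j x_{ij}$ are the predicted values, where $x_{ij}$ is the value of predictor $j$ for observation $i$,

$\beta_j$ are the coefficients of the $p$ predictors (the intercept $\beta_0$ is not penalized),

$\lambda \ge 0$ is the regularization parameter.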

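The penalty term $\lambda\sum_{j=1}^{p}|\beta_j|$ encourages sparsity. It pushes coefficients toward zero and sets some of them exactly to zero, so only the predictors that reduce the squared error enough to justify their penalty are retained. Larger values of $\lambda$ remove more predictors, and $\lambda = 0$ recovers ordinary least squares.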
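To see the sparsity effect directly, the sketch below fits scikit-learn's Lasso at several penalty strengths. It uses synthetic data in which only 5 of 20 predictors matter; the data and the grid of penalties are illustrative assumptions. The search starts at alpha_max, the smallest penalty at which every coefficient is zero under scikit-learn's scaling of the objective (squared error divided by $2n$), and then lowers the penalty step by step:

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Lasso
from sklearn.preprocessing import StandardScaler

# Synthetic data: 20 candidate predictors, only 5 of which influence y.
X, y = make_regression(n_samples=200, n_features=20, n_informative=5,
                       noise=10.0, random_state=0)
X = StandardScaler().fit_transform(X)

# Smallest alpha that zeroes every coefficient for scikit-learn's objective
# (1 / (2n)) * ||y - Xw - b||^2 + alpha * ||w||_1, with X already centered.
alpha_max = np.max(np.abs(X.T @ (y - y.mean()))) / len(y)

counts = {}
for fraction in [1.01, 0.5, 0.1, 0.01]:
    lasso = Lasso(alpha=fraction * alpha_max, max_iter=10_000).fit(X, y)
    counts[fraction] = np.count_nonzero(lasso.coef_)
    print(f"alpha = {fraction} * alpha_max: {counts[fraction]} nonzero coefficients")
```

As the penalty shrinks, predictors generally enter the model one group at a time. That progression is the sparsity behavior described above.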

Advantages of Lasso Regression

Lasso regression offers several key advantages:

Feature Selection: Lasso automatically selects important features by shrinking some coefficients to zero, which eliminates irrelevant variables from the model. This leads to simpler, more interpretable models.

Prevention of Overfitting: The regularization aspect of Lasso helps prevent overfitting by constraining the size of the coefficients. This results in models that generalize better to new, unseen data.

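Handling Multicollinearity: When predictors are highly correlated, Lasso tends to keep one variable from the group and shrink the others to zero, which reduces redundancy. Which variable it keeps can be somewhat arbitrary and may change from sample to sample, so the selection within a correlated group should be interpreted with care.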

High-dimensional Data: Lasso is particularly useful in high-dimensional settings where the number of predictors exceeds the number of observations, such as in genomics and finance.

Improved Prediction Accuracy: By focusing on the most relevant variables and reducing the variance of the coefficient estimates, Lasso can improve prediction accuracy compared to ordinary least squares, particularly when many predictors are irrelevant.

Practical Applications of Lasso Regression

Lasso regression is applicable in various fields, including:

Finance: For credit scoring and risk assessment, where many financial indicators may be correlated.

Genomics: In identifying significant genes associated with diseases when the number of genes far exceeds the number of samples.

Marketing: For customer segmentation based on numerous behavioral features.

Implementing Lasso Regression in Python

To implement Lasso regression in Python, you can use the scikit-learn library. Below is a step-by-step guide with code snippets.

Step 1: Import Libraries
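The original import list was not preserved. Below is one plausible set covering the later steps; it is an assumption, not the author's exact code:

```python
# Data handling
import numpy as np
import pandas as pd

# Modeling, preprocessing, and evaluation from scikit-learn
from sklearn.linear_model import Lasso
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
from sklearn.metrics import mean_squared_error, r2_score
```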

Step 2: Load Data

Assuming you have a dataset in a CSV file:
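The original loading code was not preserved. Here is a minimal sketch with pandas. The file name data.csv and the column name target are hypothetical placeholders, so substitute your own:

```python
import pandas as pd

def split_features_target(data, target_column="target"):
    """Separate a DataFrame into the feature matrix X and the target y."""
    X = data.drop(columns=[target_column])
    y = data[target_column]
    return X, y

def load_data(path, target_column="target"):
    """Read a CSV file and return (X, y); path and column name are placeholders."""
    return split_features_target(pd.read_csv(path), target_column)

# Hypothetical usage, assuming a file "data.csv" with a column named "target":
# X, y = load_data("data.csv", target_column="target")
```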

Step 3: Split the Data
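Here is a sketch of the split. So that the snippet runs on its own, it uses scikit-learn's make_regression to generate a synthetic stand-in for the CSV data; this is an assumption, and with real data you would pass the X and y from Step 2. The 80/20 split and random_state=42 are illustrative choices:

```python
from sklearn.datasets import make_regression
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the CSV data: 200 rows, 20 features,
# only 5 of which actually influence the target.
X, y = make_regression(n_samples=200, n_features=20, n_informative=5,
                       noise=10.0, random_state=42)

# Hold out 20% of the rows for testing; random_state makes the split reproducible.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42)
```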

Step 4: Standardize the Features

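Standardization is crucial for Lasso regression. The penalty treats every coefficient the same, but a coefficient's size depends on its feature's units: a feature measured on a large numeric scale needs only a small coefficient and is therefore penalized less. Rescaling each feature to zero mean and unit variance puts all features on an equal footing.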
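Here is a sketch using StandardScaler. It repeats the synthetic setup from the previous step so it runs on its own. The key detail is that the scaler is fit on the training set only and then reused, unchanged, on the test set:

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

X, y = make_regression(n_samples=200, n_features=20, n_informative=5,
                       noise=10.0, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42)

# Learn each feature's mean and standard deviation from the training rows only,
# then apply that same transformation to the test rows (no test-set leakage).
scaler = StandardScaler()
X_train_scaled = scaler.fit_transform(X_train)
X_test_scaled = scaler.transform(X_test)
```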

Step 5: Fit the Lasso Model
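Here is a sketch of fitting the model on the standardized training data. In scikit-learn the regularization parameter is called alpha. The value 1.0 used here is just the library default, not a tuned choice (the tuning section below covers how to pick it). Counting the nonzero entries of coef_ shows how many features Lasso kept:

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Lasso
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

X, y = make_regression(n_samples=200, n_features=20, n_informative=5,
                       noise=10.0, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42)
X_train_scaled = StandardScaler().fit_transform(X_train)

# alpha plays the role of lambda; 1.0 is the scikit-learn default, not a tuned value.
lasso = Lasso(alpha=1.0)
lasso.fit(X_train_scaled, y_train)

# Coefficients driven exactly to zero correspond to features Lasso dropped.
n_selected = np.count_nonzero(lasso.coef_)
print(f"{n_selected} of {X.shape[1]} features kept")
```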

Step 6: Make Predictions
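Here is a sketch of prediction. Steps 4 and 5 are bundled into a scikit-learn Pipeline, a design choice not in the original text. The pipeline guarantees that the test data is transformed with the scaler fitted on the training data before it reaches the model:

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import Lasso
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = make_regression(n_samples=200, n_features=20, n_informative=5,
                       noise=10.0, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42)

# Scaling + Lasso as one estimator: fit() fits the scaler on X_train only,
# and predict() reuses that fitted scaler on new data automatically.
model = make_pipeline(StandardScaler(), Lasso(alpha=1.0))
model.fit(X_train, y_train)

# Predicted target values for the held-out rows.
y_pred = model.predict(X_test)
```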

Step 7: Evaluate the Model
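Here is a sketch of evaluation with two common regression metrics, mean squared error and R². The choice of metrics is an assumption because the original snippet was not preserved. The setup repeats the previous steps so the block runs on its own:

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import Lasso
from sklearn.metrics import mean_squared_error, r2_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Same synthetic setup and model as in the previous steps.
X, y = make_regression(n_samples=200, n_features=20, n_informative=5,
                       noise=10.0, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42)
model = make_pipeline(StandardScaler(), Lasso(alpha=1.0)).fit(X_train, y_train)
y_pred = model.predict(X_test)

# MSE: average squared prediction error (lower is better, in squared units of y).
mse = mean_squared_error(y_test, y_pred)
# R^2: share of the variance in y_test explained by the model (1.0 is perfect).
r2 = r2_score(y_test, y_pred)

print(f"Test MSE: {mse:.2f}")
print(f"Test R^2: {r2:.3f}")
```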

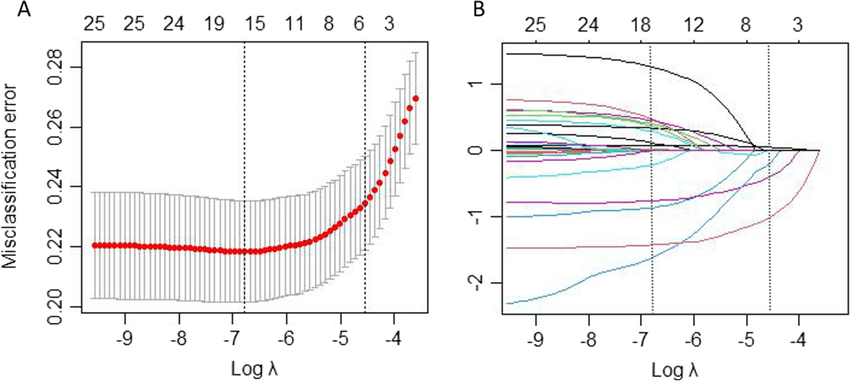

Tuning the Regularization Parameter

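The choice of the regularization parameter $\lambda$ (or alpha in scikit-learn) is critical. Note that scikit-learn's Lasso divides the squared-error term by $2n$, so its alpha corresponds to $\lambda / (2n)$ in the formula above. You can use cross-validation to find the optimal value.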
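Here is a sketch using LassoCV, which fits the model over a grid of candidate alpha values and keeps the one with the lowest cross-validated mean squared error. The synthetic data and the 5-fold setting are illustrative assumptions:

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LassoCV
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = make_regression(n_samples=200, n_features=20, n_informative=5,
                       noise=10.0, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42)

# LassoCV tries a grid of alpha values and keeps the one with the lowest
# mean squared error averaged over the 5 cross-validation folds.
model = make_pipeline(StandardScaler(), LassoCV(cv=5))
model.fit(X_train, y_train)

# make_pipeline names each step after its class in lowercase.
lasso_cv = model.named_steps["lassocv"]
print(f"Selected alpha: {lasso_cv.alpha_:.4f}")
print(f"Features kept: {np.count_nonzero(lasso_cv.coef_)} of {X.shape[1]}")
print(f"Test R^2: {model.score(X_test, y_test):.3f}")
```

One caveat of this compact version is that the scaler is fit on the full training set before LassoCV splits it into folds, so each fold sees slightly leaked scaling statistics. For a strictly leak-free search, you could instead wrap a StandardScaler plus Lasso pipeline in GridSearchCV over a list of alpha values.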

Conclusion

Lasso regression is a powerful tool for feature selection and regularization, particularly in high-dimensional datasets. By automatically selecting significant features and reducing overfitting, it can improve both the interpretability and the predictive accuracy of models. The implementation in Python using scikit-learn is straightforward, making it accessible for data scientists and analysts.